Assignment 3: Gradient Boosting (Fashion MNIST)


Points 100

Submitting: a text entry box or a file upload

Extended Deadline: Sunday, Nov 15th midnight.

What to Upload: Report and source code file.

Title: Decision Tree for Fashion MNIST image classification

Goal: Develop a gradient-boosting-based decision tree classifier to classify clothing items in the Fashion MNIST dataset.

Dataset: Use the Fashion MNIST dataset downloaded for Assignment-1 (kNN classification problem). Alternatively, it can be downloaded again from here (https://drive.google.com/open?id=1FHrIMthmGaVzdmxrPs-YMs4t0_6QjjIt). Two CSV files are provided, a training set and a test set, containing 60,000 and 10,000 observations respectively. Each observation holds the encoded values of one 28×28 grayscale image.

Each image observation is encoded as a row of 784 integer values between 0 and 255 indicating the brightness of each pixel. The label, identifying the clothing item type, is encoded as an integer value between 0 and 9. The label is the first column in each file.

0: T-shirt/top 1: Trouser 2: Pullover 3: Dress 4: Coat

5: Sandal 6: Shirt 7: Sneaker 8: Bag 9: Ankle boot

Task:

- Data Sampling: Create a random subset of the data from the training and test files separately, such that 30% of the observations under each clothing label (labels 0, 1, 2, …, 9) are included in the respective subset. Note that the training set has 60,000 observations, but the actual count may not be exactly 6,000 for each clothing type. Use these subset data frames for your analysis.

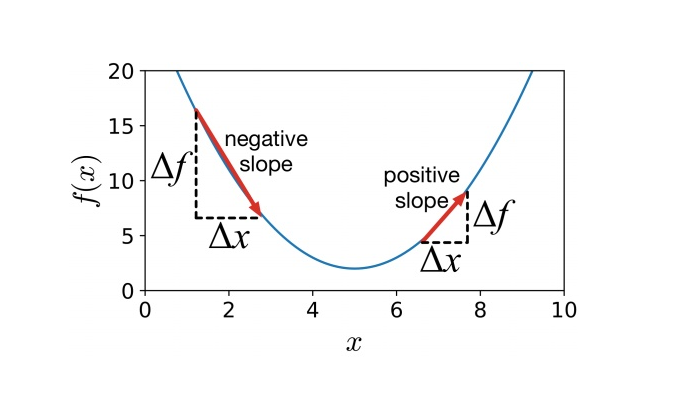
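The per-label sampling step could be sketched in base R as follows. This is only an illustration under stated assumptions: the helper name `stratified_sample`, the seed, and the toy data frame are hypothetical, and the real CSV files would be read with `read.csv()` in place of the toy data.

```r
# Draw a stratified random sample: a fraction `frac` of the rows within each label.
# Assumes the label sits in the first column, as described for the CSV files.
stratified_sample <- function(df, frac = 0.3, label_col = 1, seed = 42) {
  set.seed(seed)  # fixed seed so the subset is reproducible
  rows_by_label <- split(seq_len(nrow(df)), df[[label_col]])
  keep <- unlist(lapply(rows_by_label, function(idx) {
    n_keep <- round(frac * length(idx))
    # sample positions rather than values, so a single-row group is handled safely
    idx[sample.int(length(idx), n_keep)]
  }), use.names = FALSE)
  df[sort(keep), , drop = FALSE]
}

# Toy stand-in for the real data, with unequal class sizes on purpose.
toy <- data.frame(label = rep(0:2, times = c(10, 20, 30)), pixel1 = 1:60)
toy_sub <- stratified_sample(toy)
table(toy_sub$label)  # rows kept per label
```

Because the sample is drawn within each label, the class proportions of the subset match those of the full file even when the labels are not perfectly balanced.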

- Prediction Model Development & Validation: Train decision trees using two R libraries (i.e., C50 and xgboost) on the training sample and evaluate each tree model on the test sample. Note that C50 implements Adaptive Boosting (AdaBoost), which can be activated by specifying the parameter “trials”, whereas XGBoost implements extreme gradient boosting.

Develop decision trees using both C50 and XGBoost.
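A minimal sketch of fitting both models might look like the following. It assumes the `C50` and `xgboost` packages are installed, and it uses small synthetic data (20 columns, 3 classes) rather than the real files. The parameter values (`trials = 10`, `max_depth = 4`, `eta = 0.3`, `nrounds = 20`) are arbitrary starting points, not recommended settings.

```r
library(C50)      # C5.0 trees; trials > 1 turns on boosting
library(xgboost)  # extreme gradient boosting

# Synthetic stand-in for the sampled data (an assumption for illustration):
# 20 "pixel" columns with values 0-255 and a 3-class label driven by pixel1.
set.seed(1)
n <- 300
x <- matrix(as.numeric(sample(0:255, n * 20, replace = TRUE)), nrow = n)
colnames(x) <- paste0("pixel", seq_len(ncol(x)))
y <- as.integer(cut(x[, 1], breaks = c(-1, 85, 170, 255))) - 1L  # labels 0, 1, 2

train_idx <- seq_len(200)
x_train <- x[train_idx, ];  y_train <- y[train_idx]
x_test  <- x[-train_idx, ]; y_test  <- y[-train_idx]

# C5.0 with boosting: the class label must be a factor.
c50_fit  <- C5.0(x = as.data.frame(x_train), y = factor(y_train), trials = 10)
pred_c50 <- predict(c50_fit, newdata = as.data.frame(x_test))

# XGBoost multiclass: labels must be integers starting at 0.
dtrain  <- xgb.DMatrix(data = x_train, label = y_train)
xgb_fit <- xgb.train(
  params = list(objective = "multi:softmax", num_class = 3,
                max_depth = 4, eta = 0.3),
  data = dtrain,
  nrounds = 20
)
pred_xgb <- predict(xgb_fit, xgb.DMatrix(data = x_test))

mean(as.character(pred_c50) == as.character(y_test))  # C5.0 test accuracy
mean(pred_xgb == y_test)                             # XGBoost test accuracy
```

For the Fashion MNIST subsets, `num_class` would be 10 and the predictor matrix would hold the 784 pixel columns.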

Experiment with different model parameters to produce the best version of the decision trees under each package. Construct a confusion matrix for each modeling approach (i.e., C50 and XGBoost) and compare the results with the confusion matrix from the kNN assignment you submitted.
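One way to build the confusion matrix and derive accuracy from it is sketched below in base R. The helper names `confusion` and `accuracy` and the toy label vectors are hypothetical; with real models, `predicted` would be the predictions on the test subset.

```r
# Cross-tabulate actual vs. predicted labels. Fixing the factor levels keeps
# the matrix square even if a class is never predicted.
confusion <- function(actual, predicted, labels = 0:9) {
  table(Actual    = factor(actual, levels = labels),
        Predicted = factor(predicted, levels = labels))
}

# Overall accuracy: the share of observations on the diagonal.
accuracy <- function(cm) sum(diag(cm)) / sum(cm)

# Toy example with three classes.
actual    <- c(0, 0, 1, 1, 2, 2)
predicted <- c(0, 1, 1, 1, 2, 0)
cm <- confusion(actual, predicted, labels = 0:2)
print(cm)
accuracy(cm)            # 4 of 6 correct
diag(cm) / rowSums(cm)  # per-class recall
```

Using the same helper for C50, XGBoost, and the earlier kNN predictions keeps the three matrices directly comparable.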

- Report: Write a report that includes: [a] a brief introduction to decision trees and boosting methods, [b] the key steps taken to train and evaluate the decision-tree-based classifiers, and [c] a brief discussion of the key findings, i.e., rule sets, accuracy, confusion matrix, and other relevant performance metrics. Compare the result of each boosting approach with the kNN classifier developed and reported in Assignment-1.
